Trajectory Modeling

Cross-modal learning shows promise for overcoming the limitations of single-modality tasks. However, unless the representations of the different data sources are properly aligned, the additional modality contributes little.

We find that recent trajectory prediction approaches use the Bird's-Eye-View (BEV) scene as an additional source, yet do not significantly outperform single-source strategies. This indicates that the BEV scene and trajectory representations are not combined effectively.

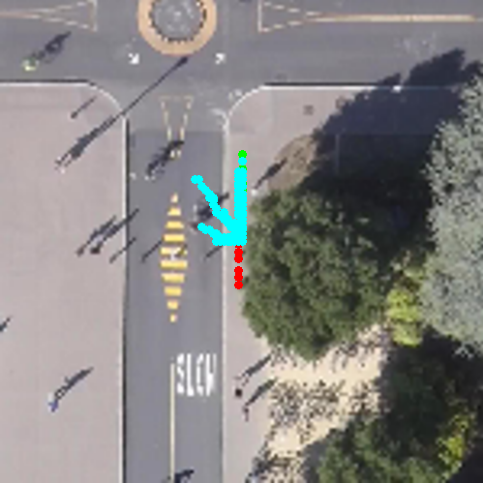

To overcome this problem, we propose TrajPrompt, a prompt-based approach that seamlessly incorporates the trajectory representation into a vision-language framework, i.e., CLIP, for BEV scene understanding and future forecasting. We discover that CLIP can attend to a local area of the BEV scene when guided by our design of text prompts and colored lines. Comprehensive results demonstrate TrajPrompt's effectiveness: it outperforms state-of-the-art trajectory predictors by a significant margin (over 35% improvement in ADE and FDE on the SDD and DroneCrowd datasets) while using fewer learnable parameters than previous trajectory modeling approaches that include scene information.
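To make the idea of colored lines paired with a text prompt more concrete, here is a minimal sketch of how an observed trajectory could be overlaid on a BEV image and referenced by color in a prompt. Everything in it is an assumption made for illustration: the function names `draw_colored_trajectory` and `build_prompt`, the nested-list image format, and the prompt wording are hypothetical and are not taken from TrajPrompt, whose actual rendering and prompt design are not specified on this page.

```python
def draw_colored_trajectory(image, points, color):
    """Overlay a polyline on an RGB image stored as rows of [r, g, b] pixels.

    `points` are integer (x, y) pixel coordinates. Each segment is rasterized
    by simple linear interpolation (an illustrative choice, not the paper's).
    """
    height, width = len(image), len(image[0])
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        steps = max(abs(x1 - x0), abs(y1 - y0), 1)
        for i in range(steps + 1):
            x = round(x0 + (x1 - x0) * i / steps)
            y = round(y0 + (y1 - y0) * i / steps)
            if 0 <= x < width and 0 <= y < height:
                image[y][x] = list(color)
    return image


def build_prompt(color_name):
    # Hypothetical wording: the prompt names the line color so that the
    # text side and the image side refer to the same trajectory.
    return f"a bird's-eye-view scene where the {color_name} line marks the observed path of an agent"


# Usage: a 5x5 black image with a red horizontal trajectory on the top row.
bev = [[[0, 0, 0] for _ in range(5)] for _ in range(5)]
draw_colored_trajectory(bev, [(0, 0), (4, 0)], (255, 0, 0))
prompt = build_prompt("red")
```

In a real pipeline the rendered image and the prompt would then be passed to the image and text encoders of a vision-language model; that step is omitted here because it depends on details this page does not give.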

| Method | Venue | Uses image information | ADE ↓ | FDE ↓ | Params (M) |
|---|---|---|---|---|---|
| Social-GAN | CVPR'18 | No | 27.33 | 41.44 | 1.75 |
| PECNet | ECCV'20 | No | 9.96 | 15.88 | 2.10 |
| NSP-SFM | ECCV'22 | No | 6.52 | 10.61 | 0.64 |
| Leapfrog | CVPR'23 | No | 8.48 | 11.66 | 11.20 |
| FlowChain | ICCV'23 | No | 9.93 | 17.17 | 1.63 |
| TUTR | ICCV'23 | No | 7.76 | 12.69 | 0.11 |
| SOPHIE | CVPR'19 | Yes | 16.27 | 29.38 | 26.23 |
| Y-net | ICCV'21 | Yes | 7.85 | 11.85 | 1.64 |
| TDOR | CVPR'22 | Yes | 6.77 | 10.46 | 0.85 |
| TrajPrompt (ours) | ECCV'24 | Yes | 3.78 (↓42%) | 6.81 (↓35%) | 0.17 |

Performance comparisons on the SDD dataset (evaluated in pixels). All results are evaluated using 20 samples, and Params denotes the number of trainable parameters in millions. The percentages in parentheses show TrajPrompt's improvement over the best prior state-of-the-art result for each metric.
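For readers unfamiliar with the metrics, the following sketch shows how ADE and FDE are commonly computed when several trajectories are sampled per agent, and how the relative improvements in the table follow from the raw numbers. It assumes the usual best-of-K convention, in which the minimum ADE and the minimum FDE are each taken over the K samples; the exact evaluation protocol used for the table is not stated on this page, and the function names are illustrative.

```python
import math


def displacement_errors(pred, truth):
    """Return (ADE, FDE) for one predicted path against the ground truth.

    ADE is the mean Euclidean distance over all time steps;
    FDE is the distance at the final time step.
    """
    dists = [math.hypot(px - tx, py - ty)
             for (px, py), (tx, ty) in zip(pred, truth)]
    return sum(dists) / len(dists), dists[-1]


def best_of_k(samples, truth):
    """Best-of-K errors: minimum ADE and minimum FDE over the sampled paths."""
    errors = [displacement_errors(sample, truth) for sample in samples]
    return min(e[0] for e in errors), min(e[1] for e in errors)


def relative_improvement(best_prior, ours):
    """Percentage reduction in error relative to the best prior result."""
    return 100.0 * (best_prior - ours) / best_prior


# Relative improvements recomputed from the table values.
ade_gain = relative_improvement(6.52, 3.78)    # NSP-SFM vs. TrajPrompt
fde_gain = relative_improvement(10.46, 6.81)   # TDOR vs. TrajPrompt
```

With the table's values, `ade_gain` rounds to 42 and `fde_gain` rounds to 35, matching the percentages shown for TrajPrompt.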


@inproceedings{tsao2024trajprompt,
  author = {Tsao, Li-Wu and Tsui, Hao-Tang and Tuan, Yu-Rou and Chen, Pei-Chi and Wang, Kuan-Lin and Wu, Jhih-Ciang and Shuai, Hong-Han and Cheng, Wen-Huang},
  title = {TrajPrompt: Aligning Color Trajectory with Vision-Language Representations},
  booktitle = {ECCV},
  year = {2024},
}